
Sophia Gunluk

I'm a first-year Ph.D. student in Computer Science at Mila, the Quebec Artificial Intelligence Institute, and the Université de Montréal (DIRO) in Montréal, supervised by Dhanya Sridhar. I previously studied at Cornell University's College of Engineering, graduating in 2022 with a B.S. in Computer Science and minors in Operations Research and Information Engineering (ORIE) and Philosophy.


Research

My general research interests are in causality and algorithmic fairness. Specifically, my current research focuses on applying causal inference tools to design fair decision models while accounting for long-term effects. Machine learning (ML) is increasingly used to automate human tasks, including decision-making in high-stakes settings such as credit lending, college admissions, medicine, and criminal justice. Understanding these models is therefore vital, since they can have both short-term and long-term impacts on society. I am also interested in causal abstraction, which aims to design high-level systems that capture the causal dynamics of lower-level systems.


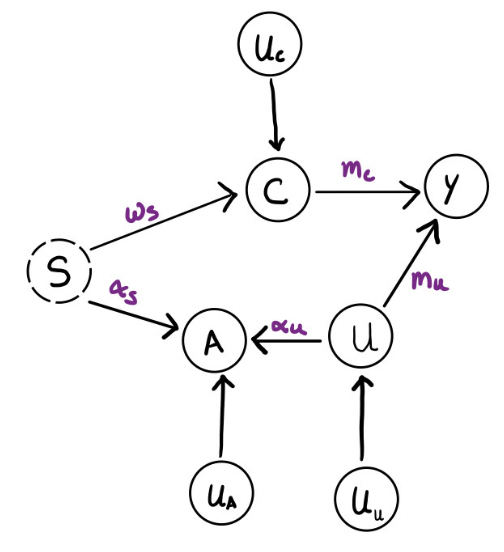

Equal Opportunity under Performative Effects

Sophia Gunluk, Antonio Gois, Dhanya Sridhar, Simon Lacoste-Julien

Algorithmic Fairness Through Time (AFT) Workshop at NeurIPS 2023

Machine learning (ML) is increasingly used to automate human tasks, including decision-making in high-stakes settings such as credit lending, college admissions, medicine, and criminal justice. Understanding these models is therefore vital, since they can have both short-term and long-term impacts on society. In this extended abstract and poster, we take first steps toward understanding which causal models lead learned decision models to be biased with respect to unobserved, but potentially confounding, sensitive attributes.


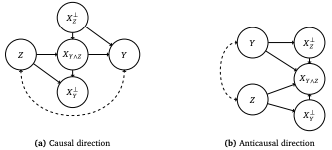

Counterfactual Invariance and Fairness

Tejas Vaidhya, Dhanya Sridhar

Causal Inference course project, Winter '23

In machine learning, spurious correlations can prevent a model from capturing the true relationship between inputs and outcomes. Stress tests based on data perturbations can be used to detect such correlations. This project extends previous work on counterfactual invariance and stress tests by applying them to algorithmic bias in models trained on the COMPAS dataset, and emphasizes the importance of understanding the true causal structure of the data and choosing appropriate regularizers.


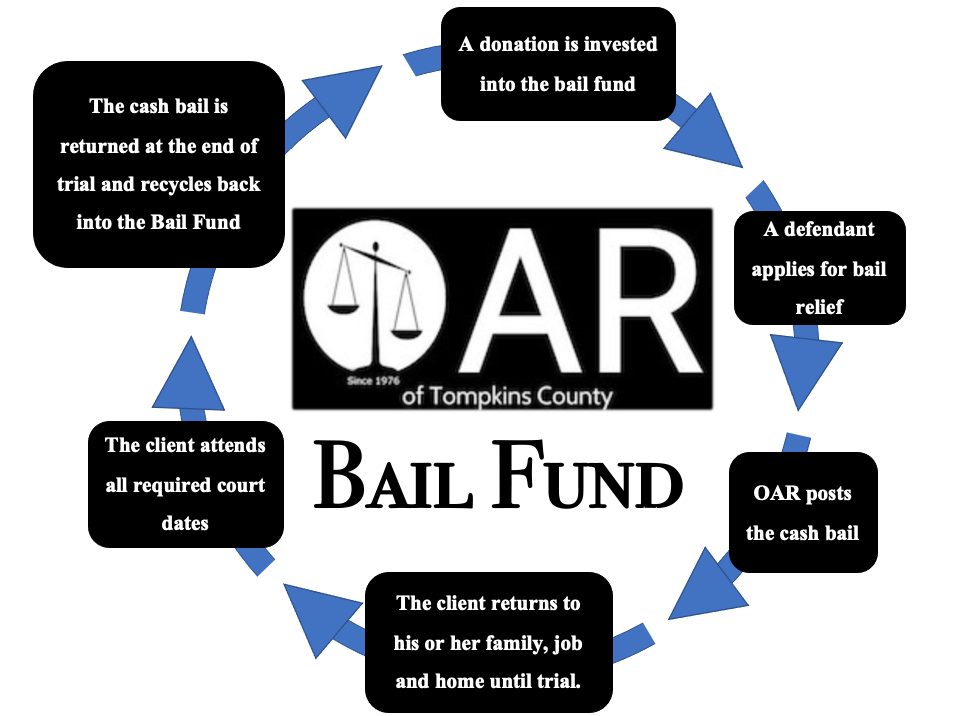

Simulating Justice: Simulation of Stochastic Models for Community Bail Funds

Jamol Pender, Yidan Zhang

INFORMS Winter Simulation Conference (WSC) 2023

Bail is a notoriously classist part of the U.S. legal system, as defendants essentially have to buy their freedom before trial. Pre-trial detention carries different personal costs depending on one's socioeconomic background, and these costs can affect the verdict. Bail funds have a long history of helping people who cannot afford bail wait for trial at home. In this paper, we describe the first stochastic model of the dynamics of a community bail fund, which integrates traditional queueing models with classic insurance/risk models to represent the fund's intricate dynamics.